Why it’s important

Splitting our dataset into training, validation, and test datasets is vital for producing a model that generalises to new data rather than overfitting to the records it was trained on. By holding records back from the model, we can check whether it has genuinely learnt the patterns within the data, rather than simply memorising the training examples. By splitting the data into three parts, we can assess performance at multiple stages and be more confident that we have identified the most accurate model.

Types of dataset splits

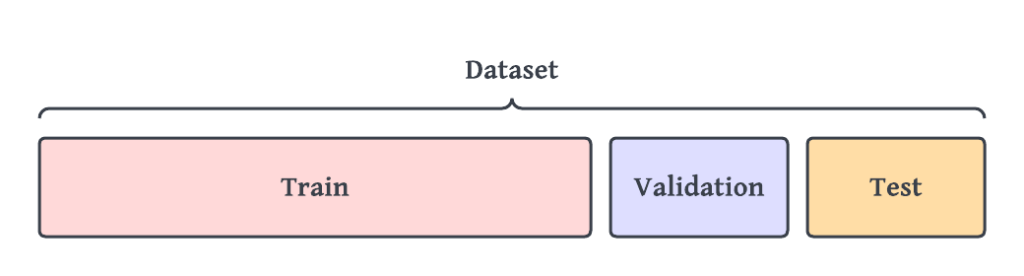

Each of the following dataset splits is a sample of the population dataset. Samples should always be as representative of the population as possible, with minimal bias. Let’s look at the purpose of each dataset:

Train dataset – This split is used to train the model. It is the data the model sees, and the data it learns patterns and relationships from.

Validation dataset – This split is used to compare the performance of a given model across different hyperparameter combinations. Here we check how well the model has learnt the patterns and relationships from the ‘train’ dataset. The split is re-used throughout tuning, each time we tweak the model’s hyperparameters. The model sees this data, but does not learn from it.

Test dataset – This split is used to test the performance of multiple finalised models. Here we assess which of the tuned models has learnt the patterns and relationships in the data best. As this is the final step in deciding which model to use, the test set is often curated to ensure it accurately covers the variation within the population. Again, models only see this data; they do not learn from it.
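To make the three roles concrete, here is a minimal sketch assuming scikit-learn and a synthetic dataset from `make_classification` (a stand-in for real data). The two model families and their hyperparameter values are arbitrary choices for illustration: each family is tuned on the validation split, and only the finalised models are compared on the test split.

```python
# Sketch of the train / validation / test roles, assuming scikit-learn.
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the population dataset (illustrative assumption).
X, y = make_classification(n_samples=1000, n_features=10, random_state=0)

# Hold out 20% for test, then 25% of the remaining 80% (20% overall) for validation.
X_rest, X_test, y_rest, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=0.25, random_state=0)

# Candidate model families and the (arbitrary) hyperparameter values to try.
candidates = {
    "logistic_regression": [LogisticRegression(C=c, max_iter=1000)
                            for c in (0.01, 0.1, 1.0, 10.0)],
    "decision_tree": [DecisionTreeClassifier(max_depth=d, random_state=0)
                      for d in (2, 4, 8, None)],
}

finalised = {}
for name, models in candidates.items():
    best_model, best_val_score = None, -1.0
    for model in models:
        model.fit(X_train, y_train)            # learns only from the train split
        val_score = model.score(X_val, y_val)  # validation is seen, not learnt from
        if val_score > best_val_score:
            best_model, best_val_score = model, val_score
    finalised[name] = best_model  # the tuned ("finalised") model for this family

# Compare the finalised models once, on the held-out test split.
test_scores = {name: model.score(X_test, y_test) for name, model in finalised.items()}
best_name = max(test_scores, key=test_scores.get)
print(test_scores, "->", best_name)
```

The test split is used only once, at the very end; using it to choose hyperparameters as well would leak information into the model selection and make the final score optimistic.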

How to split the data

Now that we are familiar with the types of dataset splits, the next step is to decide what proportion of the population should be assigned to each split.

Typically, we would split the data into train, validation, and test sets in the proportions 60%, 20%, and 20%, but this can be adjusted. If we have a simple model with few hyperparameters, the validation split can be smaller. Similarly, if we are working with big data (1 million+ records), it’s reasonable to reduce the proportions given to both the validation and test datasets, provided they continue to cover the variance of the population.
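One way to express adjustable proportions is a small helper built on scikit-learn’s `train_test_split`, applied twice: first to hold out the test set, then to take the validation set from the remainder. `split_train_val_test` is a hypothetical name used for illustration, and the datasets below are synthetic. Passing absolute counts to `train_test_split` (it accepts an integer `test_size`) avoids having to rescale the validation fraction against the reduced dataset.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def split_train_val_test(X, y, val_frac=0.2, test_frac=0.2, random_state=None):
    """Split (X, y) into train, validation and test sets.

    Hypothetical helper: fractions are of the whole dataset, so the
    defaults give a 60/20/20 split.
    """
    n = len(X)
    n_test = round(test_frac * n)
    n_val = round(val_frac * n)
    if n_test < 1 or n_val < 1 or n_test + n_val >= n:
        raise ValueError("fractions would leave an empty split")
    # Hold out the test set first, using an absolute count.
    X_rest, X_test, y_rest, y_test = train_test_split(
        X, y, test_size=n_test, random_state=random_state)
    # Take the validation set from what remains; the rest is training data.
    X_train, X_val, y_train, y_val = train_test_split(
        X_rest, y_rest, test_size=n_val, random_state=random_state)
    return X_train, X_val, X_test, y_train, y_val, y_test


# Small synthetic dataset: the default 60/20/20 split.
X = np.random.default_rng(0).normal(size=(1000, 3))
y = (X[:, 0] > 0).astype(int)
splits = split_train_val_test(X, y, random_state=42)
print([len(s) for s in splits[:3]])  # [600, 200, 200]

# 1 million records: 2% each for validation and test is still 20,000 rows apiece.
X_big = np.zeros((1_000_000, 1))
y_big = np.zeros(1_000_000, dtype=int)
big = split_train_val_test(X_big, y_big, val_frac=0.02, test_frac=0.02, random_state=42)
print([len(s) for s in big[:3]])  # [960000, 20000, 20000]
```

Whether 2% is enough depends on whether those rows still cover the variance of the population; the helper only controls the sizes, not the representativeness.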

It is also common to use cross-validation, which is especially useful when the population dataset is small. The training data is divided into a number of “folds”; the model is trained on all but one fold and validated on the remaining fold, and this is repeated so that each fold takes a turn as the validation split.
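The k-fold procedure can be sketched with scikit-learn’s `KFold`, shown both as an explicit loop and via `cross_val_score`. This is a minimal example assuming a small synthetic dataset, logistic regression as the model and five folds; a test set is still held out and is not touched during cross-validation.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score, train_test_split

# Small synthetic dataset (illustrative assumption).
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

# Keep a test set aside; cross-validation runs only on the training data.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

kfold = KFold(n_splits=5, shuffle=True, random_state=0)
model = LogisticRegression(max_iter=1000)

# Explicit loop: each fold takes one turn as the validation split.
fold_scores = []
for train_idx, val_idx in kfold.split(X_train):
    model.fit(X_train[train_idx], y_train[train_idx])
    fold_scores.append(model.score(X_train[val_idx], y_train[val_idx]))

# cross_val_score runs the same loop, fitting a fresh clone of the model per fold.
auto_scores = cross_val_score(LogisticRegression(max_iter=1000), X_train, y_train, cv=kfold)

print(len(fold_scores), sum(fold_scores) / len(fold_scores))
```

For classifiers, passing an integer such as `cv=5` to `cross_val_score` uses stratified folds instead, which keeps the class proportions similar in each fold.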

The sklearn (scikit-learn) library provides automated ways of performing both a simple train/test split and cross-validation, which you can read about here.

In conclusion

Splitting the dataset is a necessary step in most model development projects. We have covered why it’s important, the different splits and their purposes, and how to perform the split.

If you have any questions, or ideas for how we can help you with any data science projects, get in touch at hello@harksys.com